BLUFF - Multilingual Fake News Detection Benchmark

BLUFF Framework Overview

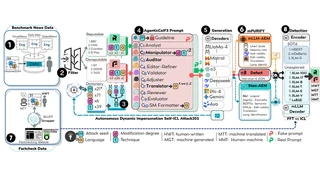

BLUFF (Benchmarking in Low-resoUrce Languages for detecting Falsehoods and Fake news) is the largest multilingual fake news detection benchmark, spanning 79 languages with over 202,000 samples. It addresses critical gaps in multilingual falsehood detection research by covering both 20 high-resource “big-head” languages and 59 low-resource “long-tail” languages.

The benchmark introduces two novel frameworks:

- AXL-CoI (Adversarial Cross-Lingual Agentic Chain-of-Interactions): a multi-agentic pipeline that orchestrates 10 fake chains and 8 real chains for controlled multilingual content generation using 19 diverse LLMs (13 instruction-tuned and 6 reasoning models).

- mPURIFY: a 4-stage multilingual quality filtering pipeline with 32 features across 5 evaluation dimensions, using asymmetric thresholds to ensure dataset integrity.
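
To make the idea of asymmetric thresholds concrete, here is a minimal sketch of one possible reading: a sample must clear a different minimum quality score depending on its label. Everything in it is an assumption for illustration. The `Sample` type, the single aggregate `quality` score, the choice to key thresholds on the label, and the threshold values are hypothetical and are not taken from mPURIFY, whose 4 stages and 32 features are not reproduced here.

```python
from dataclasses import dataclass

# Hypothetical per-label thresholds, chosen only for illustration.
# They are NOT the values used by mPURIFY.
THRESHOLDS = {"fake": 0.6, "real": 0.8}


@dataclass(frozen=True)
class Sample:
    text: str
    label: str      # "fake" or "real"
    quality: float  # hypothetical aggregate quality score in [0, 1]


def passes_filter(sample: Sample) -> bool:
    """Keep a sample only if its score meets the threshold for its label."""
    return sample.quality >= THRESHOLDS[sample.label]


def filter_samples(samples):
    """Return the samples that pass the label-specific threshold."""
    return [s for s in samples if passes_filter(s)]


samples = [
    Sample("a", "fake", 0.65),  # kept: 0.65 >= 0.6
    Sample("b", "real", 0.65),  # dropped: 0.65 < 0.8
    Sample("c", "real", 0.85),  # kept: 0.85 >= 0.8
]
kept = filter_samples(samples)
print([s.text for s in kept])  # ['a', 'c']
```

The same score of 0.65 passes for one label and fails for the other, which is the asymmetry this sketch is meant to show. A real pipeline would likely apply such checks per feature and per stage rather than to one aggregate score.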

Key features include:

- Four content types: Human-Written (HWT), Machine-Generated (MGT), Machine-Translated (MTT), and Human-Adapted Text (HAT)

- Bidirectional translation: English↔X with 4 prompt variants across 70+ languages

- 39 textual modification techniques with varying edit intensities

- Human-written data from 130 IFCN-certified fact-checking organizations across 57 languages

Experiments reveal that state-of-the-art detectors suffer up to 25.3% F1 degradation on low-resource languages compared with high-resource languages, highlighting the urgent need for equitable multilingual detection tools.
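
For readers who want to measure a comparable gap on their own detector, the sketch below computes F1 separately for a high-resource and a low-resource group and reports the drop. It is an illustration under stated assumptions, not the benchmark's evaluation code. The text above does not say whether the 25.3% figure is an absolute difference in F1 or a relative one, so the hypothetical helper `f1_gap` returns both. The labels are toy data.

```python
def f1_score(y_true, y_pred, positive=1):
    """Binary F1 for the given positive class, computed from raw counts."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def f1_gap(high, low):
    """Return (absolute, relative) F1 drop from the high- to the low-resource group.

    Each argument is a (y_true, y_pred) pair. This helper is hypothetical.
    """
    f1_high = f1_score(*high)
    f1_low = f1_score(*low)
    absolute = f1_high - f1_low
    relative = absolute / f1_high if f1_high else 0.0
    return absolute, relative


# Toy data: 1 = fake, 0 = real.
high_resource = ([1, 1, 0, 0], [1, 1, 0, 0])  # perfect predictions
low_resource = ([1, 1, 0, 0], [1, 0, 0, 0])   # one fake item missed

absolute, relative = f1_gap(high_resource, low_resource)
print(f"absolute drop: {absolute:.3f}, relative drop: {relative:.1%}")
```

Reporting both numbers avoids ambiguity when comparing against published degradation figures, because the two diverge whenever the high-resource F1 is below 1.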